These codes illustrate how to use domain decomposition with message-passing (MPI). This is the dominant programming paradigm for PIC codes today.

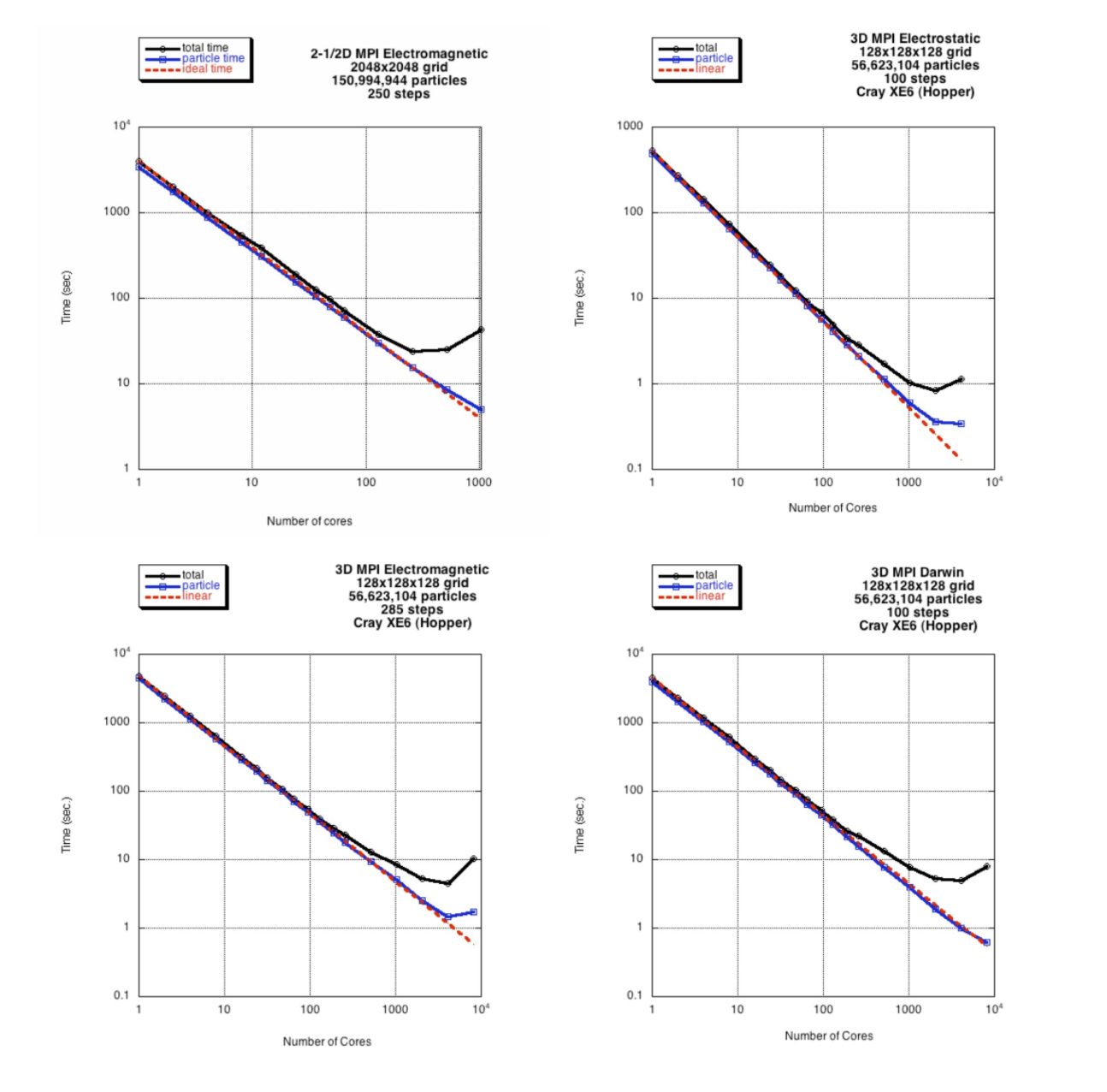

The 2D codes use only a simple 1D domain decomposition. The algorithm is described in detail in Refs. [2-3]. With 256 MPI nodes, typical execution times for the particle part of the code are about 140 ps/particle/time-step for the 2D electrostatic code, 400 ps/particle/time-step for the 2-1/2D electromagnetic code, and 1.15 ns/particle/time-step for the 2-1/2D Darwin code.
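The idea of a 1D domain decomposition can be sketched without any MPI calls. The plain-Python example below is only an illustration, not the algorithm of Refs. [2-3]: it assumes a uniform split of the grid in y into contiguous slabs, periodic boundaries, and hypothetical names (`slab_bounds`, `owner_of`, `outgoing`, `ny`, `nvp`) that do not come from the codes themselves. It shows how each rank could decide which of its particles now belong to another rank and would have to be sent there.

```python
def slab_bounds(ny, nvp):
    """Split ny grid rows into nvp contiguous slabs (assumed uniform split).

    Returns a list of (lo, hi) pairs, one per rank, with hi exclusive.
    """
    base, extra = divmod(ny, nvp)
    bounds = []
    lo = 0
    for rank in range(nvp):
        # The first `extra` ranks take one additional row.
        size = base + (1 if rank < extra else 0)
        bounds.append((lo, lo + size))
        lo += size
    return bounds


def owner_of(y, bounds, ny):
    """Return the rank whose slab contains position y (periodic in y)."""
    y = y % ny
    if y >= ny:  # guard against floating-point round-up to ny
        y = 0.0
    for rank, (lo, hi) in enumerate(bounds):
        if lo <= y < hi:
            return rank
    raise ValueError("position outside all slabs")


def outgoing(ys, my_rank, bounds, ny):
    """Group indices of particles that left my_rank's slab by destination rank.

    In a real MPI code these groups would be packed into send buffers.
    """
    moves = {}
    for i, y in enumerate(ys):
        dest = owner_of(y, bounds, ny)
        if dest != my_rank:
            moves.setdefault(dest, []).append(i)
    return moves


if __name__ == "__main__":
    bounds = slab_bounds(16, 4)
    print(bounds)
    # Rank 0 holds particles at these y positions after a push.
    print(outgoing([0.5, 3.9, 4.2, -0.5], 0, bounds, 16))
```

With this layout, particles that move only a short distance per time step end up on a neighboring rank, which is why a slab decomposition keeps the communication pattern simple.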

The 3D codes use a simple 2D domain decomposition. With 512 MPI nodes, typical execution times for the particle part of the code are about 150 ps/particle/time-step for the 3D electrostatic code, 360 ps/particle/time-step for the 3D electromagnetic code, and 1.0 ns/particle/time-step for the 3D Darwin code.
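In a 2D domain decomposition each rank owns a block in two of the three dimensions, so it has neighbors in both directions. The sketch below is a minimal illustration under stated assumptions, not the layout used by the codes: it assumes a periodic processor grid of `nvpy` by `nvpz` ranks partitioning y and z, with ranks numbered fastest in y. All function and parameter names are hypothetical.

```python
def rank_to_coords(rank, nvpy, nvpz):
    """Map a linear rank to (py, pz) on an nvpy x nvpz processor grid."""
    if not 0 <= rank < nvpy * nvpz:
        raise ValueError("rank out of range")
    return rank % nvpy, rank // nvpy


def coords_to_rank(py, pz, nvpy, nvpz):
    """Map processor-grid coordinates back to a rank, wrapping periodically."""
    return (pz % nvpz) * nvpy + (py % nvpy)


def neighbors(rank, nvpy, nvpz):
    """Return the four nearest-neighbor ranks in the y and z directions."""
    py, pz = rank_to_coords(rank, nvpy, nvpz)
    return {
        "y_lower": coords_to_rank(py - 1, pz, nvpy, nvpz),
        "y_upper": coords_to_rank(py + 1, pz, nvpy, nvpz),
        "z_lower": coords_to_rank(py, pz - 1, nvpy, nvpz),
        "z_upper": coords_to_rank(py, pz + 1, nvpy, nvpz),
    }


if __name__ == "__main__":
    # A small 4 x 2 processor grid (8 ranks) for illustration.
    for rank in range(8):
        print(rank, rank_to_coords(rank, 4, 2), neighbors(rank, 4, 2))
```

Compared with the 1D case, a particle leaving a block may need to move in y, in z, or in both, which one common approach handles by exchanging in one direction and then the other.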

The CPUs (2.67 GHz Intel Nehalem processors) were throttled down to 1.6 GHz for these benchmarks.
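As a rough guide to reading the timings quoted above, the sketch below converts a per-particle figure into an estimated wall-clock time for the particle part of a run. It assumes the quoted numbers are aggregate times for the whole parallel run (wall time divided by the number of particles and time steps), and that multiplying by the node count gives an approximate per-node cost. Both are interpretations rather than statements from the benchmarks, and the particle and step counts are made up for illustration.

```python
PS = 1.0e-12  # one picosecond in seconds
NS = 1.0e-9   # one nanosecond in seconds


def particle_wall_time(t_per_particle_step, n_particles, n_steps):
    """Estimated wall-clock seconds spent in the particle part of a run.

    Assumes t_per_particle_step is an aggregate figure for the whole run.
    """
    return t_per_particle_step * n_particles * n_steps


def per_node_time(t_per_particle_step, n_nodes):
    """Approximate time per particle per step on a single node (assumption)."""
    return t_per_particle_step * n_nodes


if __name__ == "__main__":
    # Hypothetical run: 1e9 particles, 1000 steps, 2D electrostatic figure.
    seconds = particle_wall_time(140 * PS, 1.0e9, 1000)
    print(f"estimated particle time: {seconds:.1f} s")
    print(f"per-node cost: {per_node_time(140 * PS, 256) / NS:.2f} ns")
```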

Electrostatic:

Electromagnetic:

3. 2-1/2D Parallel Electromagnetic Spectral code: pbpic2

4. 3D Parallel Electromagnetic Spectral code: pbpic3

Darwin:


Want to contact the developer? Send mail to Viktor Decyk at decyk@physics.ucla.edu.