New UPIC Releases

The PICKSC group has released updated UPIC codes.

For 1D, the latest code is version UPIC 2.1.0. It uses only OpenMP for parallelization, and it includes a Python script. This is the first 1D version of the UPIC codes that has been made available.

For 2D and 3D, the latest version is UPIC 2.0.4.1. In this update, all Python scripts have been updated to work with Python 2 and 3, and the Makefiles have been modified for compatibility with gfortran 10 (where some warnings have become errors by default). The post-processors in the ReadFiles directories have been reorganized and simplified for the user. In addition, analytic Maxwell solvers have been added, which allow the EM codes to run with time steps 2-3 times larger than the Courant limit. A bug in the velocity space diagnostic was also fixed.

All of these codes may be directly downloaded here on the PICKSC site. The public repository is also available on GitHub and may be found here: UPIC-2.0 (you must be logged onto GitHub for this link to take you to a valid site).

KNL Timings

PICKSC researchers have been updating our software to take full advantage of the many-core Intel Knights Landing (KNL) nodes.

An OpenMP 3D electrostatic code from UPIC 2.0 has achieved a performance of 850 psec/particle-step on a single Intel KNL node. On a large memory KNL such as the 96 GB node on the Cori machine at NERSC, a PIC simulation with a billion particles will run in about one second per time step.

A new branch of QuickPIC has also been compiled and run on Cori at NERSC. On a single KNL node with 68 threads, the total time spent on one particle per step is 3.82 ns (including 1 iteration).
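As a rough check of these figures, the time per step can be estimated by multiplying the particle count by the quoted time per particle per step. The sketch below assumes that this simple product is an adequate estimate and that field-solve and communication costs are either included in the quoted figure or negligible. The function name is hypothetical and is not part of UPIC or QuickPIC.

```python
def seconds_per_step(num_particles, time_per_particle_step_s):
    """Estimate wall-clock seconds per time step from a per-particle cost.

    Assumption: the cost scales linearly with the number of particles.
    """
    return num_particles * time_per_particle_step_s


# Figures quoted above: 850 ps (UPIC 2.0 electrostatic) and 3.82 ns (QuickPIC)
# per particle per step, each on a single KNL node.
billion = 1.0e9
print("UPIC 3D ES: %.2f s per step" % seconds_per_step(billion, 850e-12))
print("QuickPIC:   %.2f s per step" % seconds_per_step(billion, 3.82e-9))
```

With a billion particles, the 850 ps figure gives a little under one second per step, consistent with the statement above.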

Fortran OpenPMD File Writers

We have written an open source program that contains Fortran interfaces for parallel writing of 2D/3D mesh field data and particle data into HDF5 files using the OpenPMD standard.

The software is open source and available on our GitHub repositories here.

New QuickPIC branch

We have added a new branch in our QuickPIC repository (available here).

In addition to the MPI-OpenMP hybrid algorithm, this branch contains new 2D particle subroutines (in source/part2d_lib77.f) that use a vectorization algorithm.

The vectorization algorithm comes from the UPIC Skeleton Code. For QuickPIC, it has been adapted to solve the Quasi-Static-Approximation PIC model and modified to work with MPI.

A simple profiling tool has been added to the code to report the computing time spent in procedures of interest. The code can be compiled and run on Cori at NERSC. On a single KNL node with 68 threads, the total time spent on one particle per step is 3.82 ns (including 1 iteration).

First Annual OSIRIS Users and Developers Workshop

On September 18-20, 2017 the first annual OSIRIS Users and Developers Workshop was held at UCLA. The aims of the workshop were to introduce users to the new features and design of our plasma simulation code OSIRIS 4.0, to allow OSIRIS users to share experiences and discuss best practices, to identify useful test and demonstration problems, to discuss how to transition from being a user to an active developer, and to identify areas for near term software improvements and a community strategy for carrying out necessary developments.

There were over 60 attendees from the U.S., Europe, and Asia, with widely ranging experiences and expertise. It was a great success, with many discussions and new collaborations and friendships.

The agenda, as well as copies of many of the talks, can be found on our Presentations page. A summary of the workshop will be posted soon.

UPIC 2.0.2 now public

The PICKSC group has released UPIC 2.0.2, an update to the first release of UPIC (2.0.1). The major new features here are namelist inputs, a full set of field diagnostics, and initialization with non-uniform densities. As before, codes can be compiled to run with 2 levels of parallelism (MPI/OpenMP) or OpenMP only.

The primary purpose of this framework is to provide trusted components for building and customizing parallel Particle-in-Cell codes for plasma simulation. The framework provides libraries as well as reference applications illustrating their use. The public repository is available on GitHub and may be found here: UPIC-2.0 (you must be logged onto GitHub for this link to take you to a valid site).

The files for the 2D and 3D components of UPIC 2.0.2 may also be directly downloaded here on the PICKSC site.

Whistler anisotropy instability study uses PICKSC skeleton code

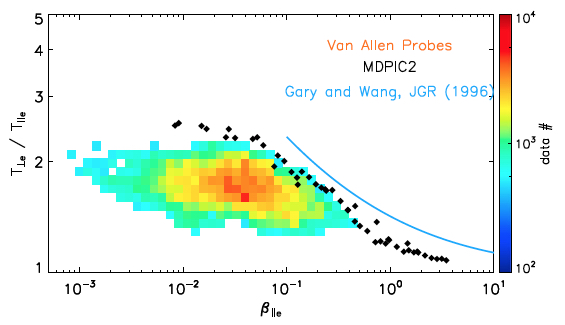

A new article in the Journal of Geophysical Research: Space Physics utilized simulations with the 2D Darwin OpenMP skeleton code.

An, X., C. Yue, J. Bortnik, V. Decyk, W. Li, R. M. Thorne (2017), On the parameter dependence of the whistler anisotropy instability, J. Geophys. Res. Space Physics, 122, DOI:10.1002/2017JA023895

The evolution of the whistler anisotropy instability relevant to whistler-mode chorus waves in the Earth’s inner magnetosphere was studied using kinetic simulations with mdpic2 and compared with satellite observations.

UPIC 2.0.1 now public

The PICKSC group has released UPIC 2.0.1. The primary purpose of this framework is to provide trusted components for building and customizing parallel Particle-in-Cell codes for plasma simulation. The framework provides libraries as well as reference applications illustrating their use.

The public repository is available on GitHub and may be found here: UPIC-2.0 (you must be logged onto GitHub for this link to take you to a valid site).

The files for the 2D and 3D components of UPIC 2.0.1 may also be directly downloaded here on the PICKSC site.

QuickPIC and OSHUN now public

The PICKSC group has released QuickPIC and OSHUN as open-source codes.

The public repositories are available on GitHub and may be found here: QuickPIC and OSHUN. (You must be logged onto GitHub for these links to take you to a valid site).

QuickPIC is a 3D parallel (MPI & OpenMP Hybrid) Quasi-Static PIC code, which is developed based on the framework UPIC.

OSHUN is a parallel Vlasov-Fokker-Planck plasma simulation code that employs an arbitrary-order spherical harmonic velocity-space decomposition. The public repository currently houses a 1D C++ version and a 1D pure Python version (with optional C-modules for improved performance). A 2D C++ version will be added shortly.

If you have any questions, please contact Weiming An for QuickPIC and Ben Winjum for OSHUN.

PICKSC members present at AAC

PICKSC members recently attended the 17th Advanced Accelerator Concepts (AAC) Workshop held at the National Harbor in Washington DC between July 31 and August 5. Copies of the presentations have not been posted. The PI of PICKSC received the AAC prize “for his leadership and pioneering contributions to the theory and particle-in-cell simulations of plasma based acceleration”. In addition, a PICKSC visiting student from IST in Portugal, Diana Amorim, received a best poster prize for her presentation, “Transverse evolution of positron beams accelerating in hollow plasma channel non-linear wakefields.”

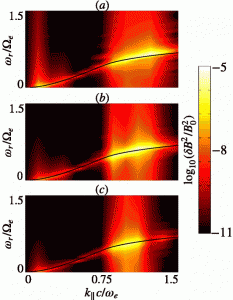

Publication uses PICKSC skeleton code

The first publication based on the skeleton codes recently appeared in Physics of Plasmas.

R. Scott Hughes, Joseph Wang, Viktor K. Decyk, and S. Peter Gary, “Effects of variations in electron thermal velocity on the whistler anisotropy instability: Particle-in-cell simulations,” Physics of Plasmas 23, 042106 (2016). DOI: 10.1063/1.4945748

This work made use of the 2D Darwin code mdpic2 and studied whistler wave instabilities for solar wind parameters.

New Coarray Fortran Skeleton Code

Alessandro Fanfarillo and Damian Rouson from Italy have translated the PICKSC skeleton code ppic2 to utilize Coarray Fortran. Coarray Fortran enables a programmer to write parallel programs using a Partitioned Global Address Space (PGAS) scheme. It is part of the Fortran 2008 standard and provides an alternative to the dominant Message-Passing Interface (MPI) paradigm.

The code ppic2_caf is available here on the PICKSC site.

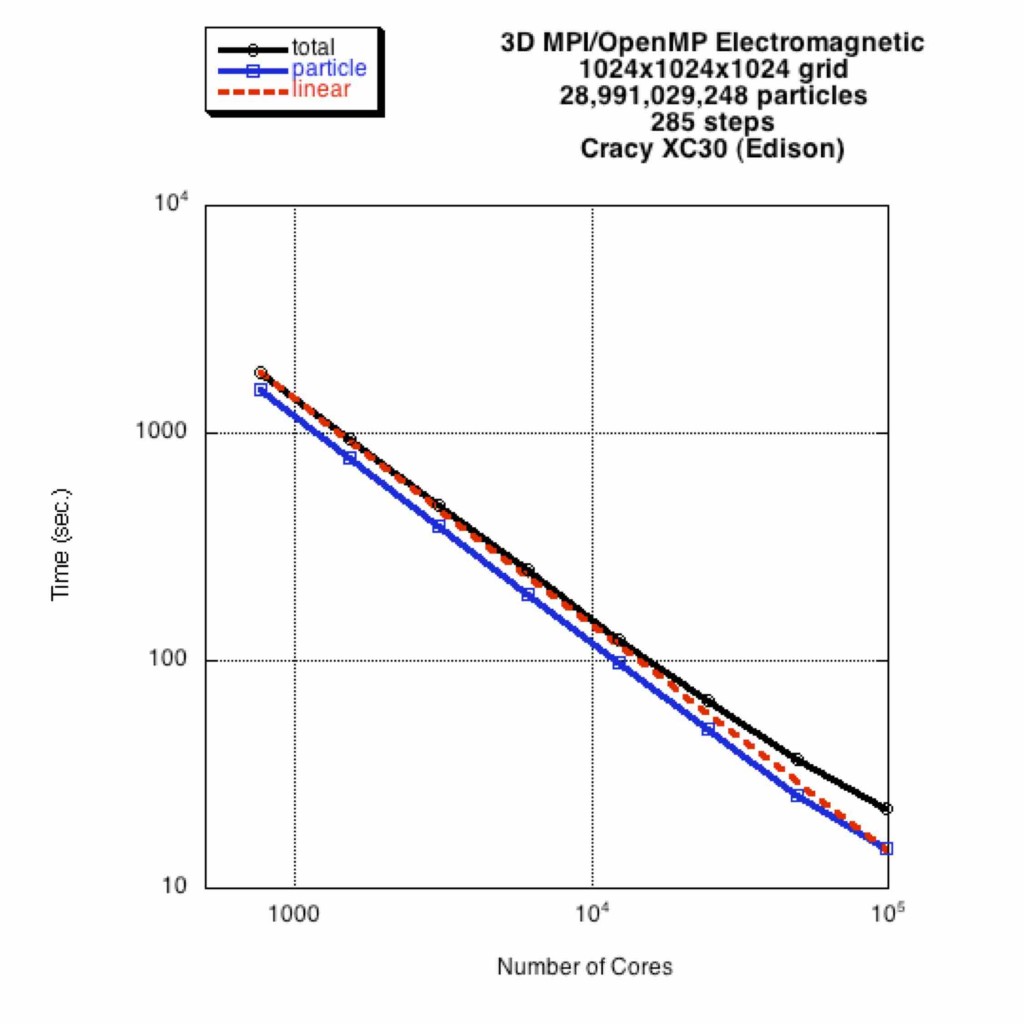

3D OpenMP/MPI code benchmarks

Viktor Decyk has developed fully 3D versions of skeleton PIC codes illustrating the hybrid parallel algorithmic techniques for utilizing both OpenMP and MPI. These skeleton codes have recently been benchmarked on the Edison machine at NERSC.

For the electromagnetic code on a problem size of 1024^3 grids with 27 particles/cell, one can see good scaling up to nearly 100,000 cores, nearly the entire Edison machine. There are 10 FFTs per time step, and the particle time gives about 2 psec/particle/time step at 98,304 cores.

Electrostatic, electromagnetic, and Darwin versions are available. These codes may be accessed here on the OpenMP/MPI skeleton code subpage or at our GitHub repository for skeleton codes.

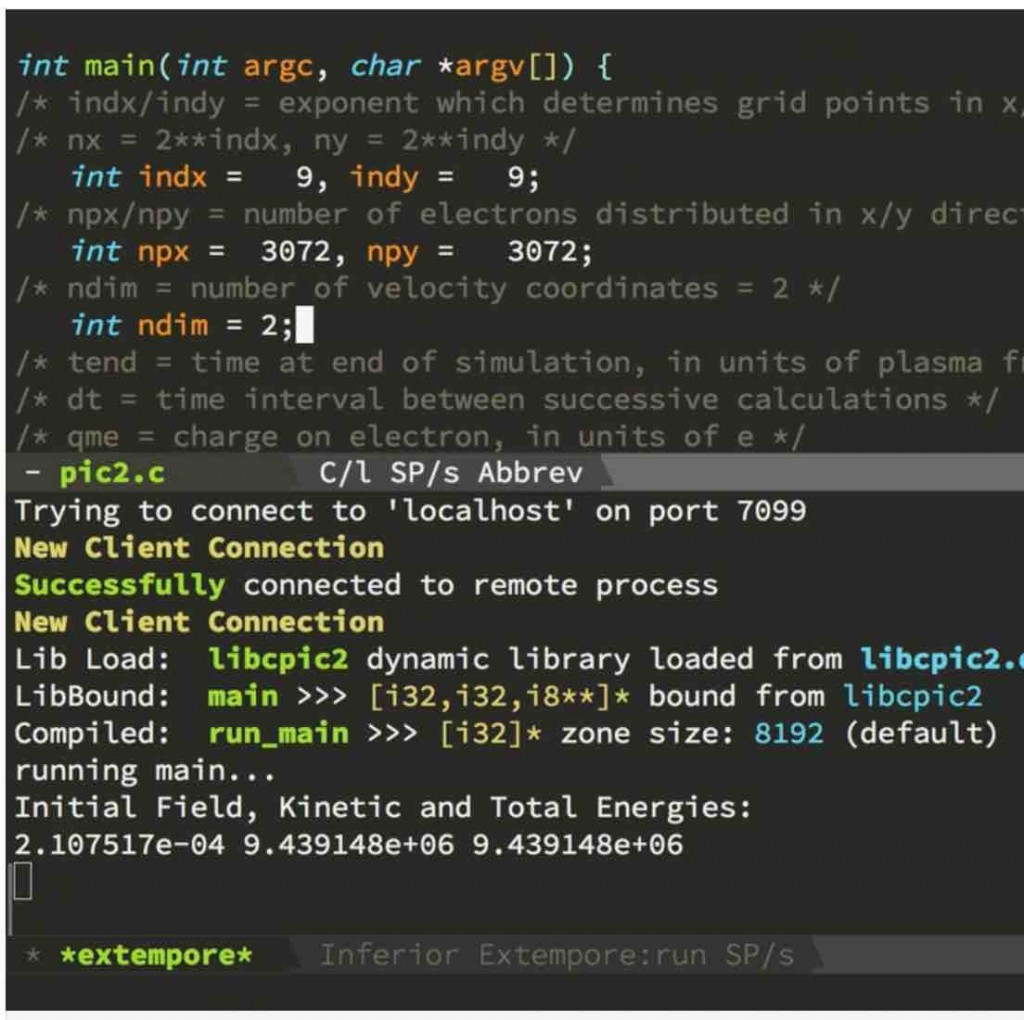

PICKSC codes at SC15

PICKSC’s PIC skeleton codes will be used as a case study for a tutorial on “live programming” at SC15 in Austin, Texas on Nov 15.

For more details see: http://sc15.supercomputing.org/schedule/event_detail?evid=tut145.

Henry Gardner visits PICKSC

Professor Henry Gardner, from the Research School of Computer Science at the Australian National University, visited with Viktor Decyk from May 18-22. They continued their long-standing collaboration on design patterns for scientific programming using the new object-oriented features of Fortran 2003. They hope to incorporate these patterns in future versions of the PICKSC production codes.

Henry Gardner is co-author of an advanced textbook on design patterns in scientific programming (book home page and Amazon link).

New video describes activities at UCLA’s Plasma Science and Technology Institute

A new video has been produced that highlights activities at the Plasma Science and Technology Institute at UCLA. This Institute consists of affiliated laboratories and research groups that investigate fundamental questions related to plasmas, and it includes the research activities performed by PICKSC scientists.

The areas of study include basic plasma physics, fusion research, space plasmas, laser-plasma interactions, advanced accelerators, novel radiation sources, and plasma-materials processing. Diverse programs encompass experimentation, theory, and computer simulation.

Ben Swift: Live Steering of Parallel PIC Codes

Ben Swift, a Research Fellow in the School of Computer Science at the Australian National University, has been working on a project looking at run-time load balancing and optimisation of scientific simulations running on parallel computing architectures. He chose PICKSC’s Skeleton Codes as a basis for studying live programming workflow.

You can watch a video here: Live programming: bringing the HPC development workflow to life

More about Ben Swift may be found on his webpage.

Skeleton codes are available

We are pleased to announce the availability and full release of a hierarchy of skeleton codes.

Skeleton codes are bare-bones but fully functional PIC codes containing all the crucial elements but not the diagnostics and initial conditions typical of production codes. These are sometimes also called mini-apps. We are providing a hierarchy of skeleton PIC codes, from very basic serial codes for beginning students to very sophisticated codes with 3 levels of parallelism for HPC experts. The codes are designed for high performance, but not at the expense of code obscurity. They illustrate the use of a variety of parallel programming techniques, such as MPI, OpenMP, and CUDA, in both Fortran and C. For students new to parallel processing, we also provide some Tutorial and Quickstart codes.

If you would like to register to receive regular software updates, please contact us.

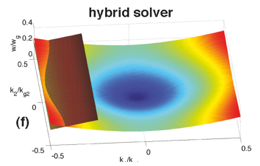

Mitigation of numerical Cerenkov radiation in PIC

UCLA graduate student Peicheng Yu, current PICKSC post-doc Xinlu Xu, and collaborators have been investigating the mitigation of the numerical Cerenkov instability (NCI), which occurs when a plasma drifts near the speed of light in a PIC code. In a series of papers (references below) they have developed a general theory as well as mitigation strategies for fully spectral (FFT based) and hybrid (FFT and finite difference) solvers. Recently they published an article in Computer Physics Communications [1] and posted a new article on the arXiv: arXiv:1502.01376 [physics.comp-ph]. If you would like more information, please contact Mr. Peicheng Yu at tpc02@ucla.edu.

References

1. P. Yu, X. Xu, V. K. Decyk, F. Fiuza, J. Vieira, F. S. Tsung, R. A. Fonseca, W. Lu, L. O. Silva, W. B. Mori, “Elimination of the numerical Cerenkov instability for spectral EM-PIC codes.” COMPUTER PHYSICS COMMUNICATIONS 192, 32 (2015). doi link

2. P. Yu, X. Xu, V. K. Decyk, W. An, J. Vieira, F. S. Tsung, R. A. Fonseca, W. Lu, L. O. Silva, W. B. Mori, “Modeling of laser wakefield acceleration in Lorentz boosted frame using EM-PIC code with spectral solver.” JOURNAL OF COMPUTATIONAL PHYSICS 266, 124 (2014). doi link

3. X. Xu, P. Yu, S. F. Martins, F. S. Tsung, V. K. Decyk, J. Vieira, R. A. Fonseca, W. Lu, L. O. Silva, W. B. Mori, “Numerical instability due to relativistic plasma drift in EM-PIC simulations.” COMPUTER PHYSICS COMMUNICATIONS 184, 2503 (2013). doi link

4. P. Yu, et al., in Proc. 16th Advanced Accelerator Concepts Workshop, San Jose, California, 2014.

5. P. Yu, X. Xu, V. K. Decyk, S. F. Martins, F. S. Tsung, J. Vieira, R. A. Fonseca, W. Lu, L. O. Silva, and W. B. Mori, “Modeling of laser wakefield acceleration in the Lorentz boosted frame using OSIRIS and UPIC framework,” AIP Conf. Proc. 1507, 416 (2012). doi link

Implementation of a Quasi-3D algorithm into OSIRIS

PICKSC member Asher Davidson has recently published an article in the Journal of Computational Physics detailing the implementation of a quasi-3D algorithm into OSIRIS.

For many plasma physics problems, three-dimensional and kinetic effects are very important. However, such simulations are very computationally intensive. Fortunately, there is a class of problems for which there is nearly azimuthal symmetry and the dominant three-dimensional physics is captured by the inclusion of only a few azimuthal harmonics. Recently, it was proposed [1] to model one such problem, laser wakefield acceleration, by expanding the fields and currents in azimuthal harmonics and truncating the expansion. The complex amplitudes of the fundamental and first harmonic for the fields were solved on an r–z grid and a procedure for calculating the complex current amplitudes for each particle based on its motion in Cartesian geometry was presented using a Marder’s correction to maintain the validity of Gauss’s law. In this paper, we describe an implementation of this algorithm into OSIRIS using a rigorous charge conserving current deposition method to maintain the validity of Gauss’s law. We show that this algorithm is a hybrid method which uses a particle-in-cell description in r–z and a gridless description in ϕ. We include the ability to keep an arbitrary number of harmonics and higher order particle shapes. Examples for laser wakefield acceleration, plasma wakefield acceleration, and beam loading are also presented and directions for future work are discussed.
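The truncated azimuthal expansion described in the abstract can be illustrated with a small sketch. It assumes the convention F(ϕ) = Re Σ_m F^m exp(imϕ) at a fixed (r, z) point; the sign convention, the function names, and the sample field are illustrative assumptions and are not taken from OSIRIS.

```python
import cmath
import math


def azimuthal_amplitudes(samples, max_mode):
    """Complex amplitudes F^0..F^max_mode of a real field sampled uniformly in phi.

    Assumed convention: F(phi) = Re sum_m F^m * exp(i*m*phi).
    """
    n = len(samples)
    amps = []
    for m in range(max_mode + 1):
        total = sum(f * cmath.exp(-1j * m * 2.0 * math.pi * k / n)
                    for k, f in enumerate(samples))
        # Modes m >= 1 carry a factor of 2 because only non-negative m are kept.
        weight = 1.0 if m == 0 else 2.0
        amps.append(weight * total / n)
    return amps


def reconstruct(amps, phi):
    """Evaluate the truncated expansion at angle phi."""
    return sum((a * cmath.exp(1j * m * phi)).real for m, a in enumerate(amps))


# Hypothetical field at one (r, z) point, containing only m = 0 and m = 1 content.
n_phi = 16
ring = [2.0 * math.pi * k / n_phi for k in range(n_phi)]
field = [0.3 + 1.5 * math.cos(p) - 0.7 * math.sin(p) for p in ring]

amps = azimuthal_amplitudes(field, max_mode=1)
print("F^0 =", amps[0])
print("F^1 =", amps[1])
```

A field with only m = 0 and m = 1 content is represented exactly by two complex amplitudes per (r, z) point, which is why keeping a few harmonics is far cheaper than resolving ϕ on a full 3D grid.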

References

A. Davidson et al., J. Comp. Phys. 281, 1063 (2015).

NSF highlights plasma-accelerator PIC simulations

NSF has highlighted research done with QuickPIC for the FACET simulations. See link here.

Article excerpt: 'In recent years, however, scientists experimenting with so-called "plasma wakefields" have found that accelerating electrons on waves of plasma, or ionized gas, is not only more efficient, but also allows for the use of an electric field a thousand or more times higher than those of a conventional accelerator.

'And most importantly, the technique, where electrons gain energy by "surfing" on a wave of electrons within the ionized gas, raises the potential for a new generation of smaller and less-expensive particle accelerators.

' "The big picture application is a future high energy physics collider," says Warren Mori, a professor of physics, astronomy and electrical engineering at the University of California, Los Angeles (UCLA), who has been working on this project. "Typically, these cost tens of billions of dollars to build. The motivation is to try to develop a technology that would reduce the size and the cost of the next collider." '

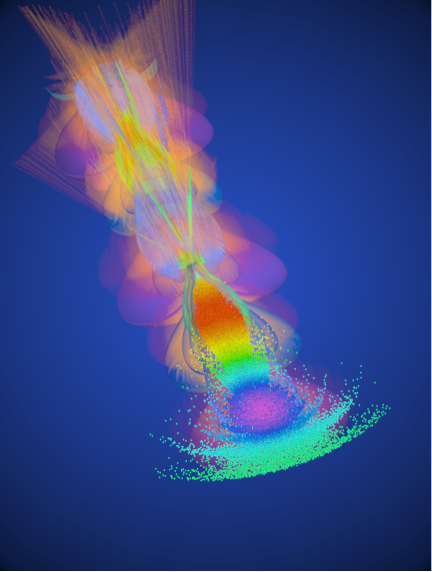

QuickPIC makes the Nature cover

Breakthrough in plasma-based accelerator research is facilitated using QuickPIC, a code based on the UPIC Framework.

Recently, a team of researchers from SLAC and UCLA demonstrated a milestone in plasma-based accelerator research. Using two properly spaced electron bunches, they were able to demonstrate efficient transfer of energy from a drive electron beam to a second trailing electron beam.

The planning and interpretation of the experiment relied on QuickPIC as well as OSIRIS.

Nature article: M. Litos et al., Nature 515, 92 (2014).

General science write-up in Nature News and Views: Accelerator physics: Surf’s up at SLAC.

More links: Nature

- Nature (Vol. 515, Num. 7525)

- Nature (direct link to paper)

- Nature News & Views (Mike Downer)

- http://www.nature.com/nature/journal/v515/n7525/full/515040a.html

- Nature Podcast (includes actual audio interview)

SLAC

"Hard" Science Media

Mainstream Media, Science and Tech Blogs, etc.

- LA Times

- NBC News

- io9

- Yahoo News

- Huffington Post

- Ars Technica

- Vice – Motherboard

- Gizmodo

- IFLscience

- LiveScience (article)

- LiveScience (video)

- The Conversation

- Wallstreet OTC

- Voice of America

- Maine News Online

- HNGN

- Red Orbit

- Sci-Tech Today

- Science 2.0

- Extreme Tech

- Tech Times

- Tech Fragments

- R&D Magazine

- Overclockers Club

- Science Codex

- ECN

- Engineering.com

- Laboratory Equipment

- Semiconductor Engineering

- Scientific Computing

International Media

- Ciencia Plus (Spain)

- Media INAF (Italy)

- Repubblica (Italy)

- Welt der Physik (Germany)

- ANSA (Italy)

- Tiscali (Italy)

- Spektrum (Germany)

- Pro-Physik (Germany)

- Wired (Italian version)

- Golem (Germany)

- VESTI (Russia)

OSIRIS is now GPU and Intel Phi enabled

PICKSC members, notably Adam Tableman, Viktor Decyk, and Ricardo Fonseca, have developed strategies for porting PIC codes to many-core architectures including GPUs and Intel Phi processors. Some of these ideas have been implemented into OSIRIS so that it is GPU and Intel Phi enabled.

The GPU version of OSIRIS is fully operational in one, two and three dimensions, with support for most of the features of OSIRIS. Dynamic GPU load balancing/tuning is included and the code is fully MPI ready and capable of running on thousands of GPU nodes, with tailored support for Fermi and Kepler generations.

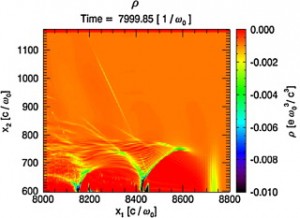

PICKSC members get INCITE award

90 million processor hours on the IBM Blue Gene/Q Machine at Argonne National Laboratory have been awarded to Frank Tsung (PI), Warren Mori (Co-PI), Ben Winjum, and Viktor Decyk as a 2015 INCITE Award for "Petascale Simulations of Laser Plasma Interactions Relevant to IFE".

The Research Summary for the Award: Inertial (laser-initiated) fusion energy (IFE) holds incredible promise as a source of clean and sustainable energy for powering devices. However, significant obstacles to obtaining and harnessing IFE in a controllable manner remain, including the fact that self-sustained ignition has not yet been achieved in IFE experiments. This inability is attributed in large part to excessive laser-plasma instabilities (LPIs) encountered by the laser beams.

LPIs such as two-plasmon decay and stimulated Raman scattering can absorb, deflect, or reflect laser light, disrupting the fusion drive, and can also generate energetic electrons that threaten to preheat the target. Nevertheless, IFE schemes like shock ignition (where a high-intensity laser is introduced toward the end of the compression pulse) could potentially take advantage of LPIs to generate energetic particles to create a useful shock that drives fusion. Therefore, developing an understanding of LPIs will be crucial to the success of any IFE scheme.

The physics involved in LPI processes is complex and highly nonlinear, involving both wave-wave and wave-particle interactions and necessitating the use of fully nonlinear kinetic computer models, such as fully explicit particle-in-cell (PIC) simulations that are computationally intensive and thus limit how many spatial and temporal scales can be modeled.

By using highly optimized PIC codes, however, researchers will focus on using fully kinetic simulations to study the key basic high energy density science directly relevant to IFE. The ultimate goal is to develop a hierarchy of kinetic, fluid, and other reduced-description approaches that can model the full space and time scales, and close the gap between particle-based simulations and current experiments.

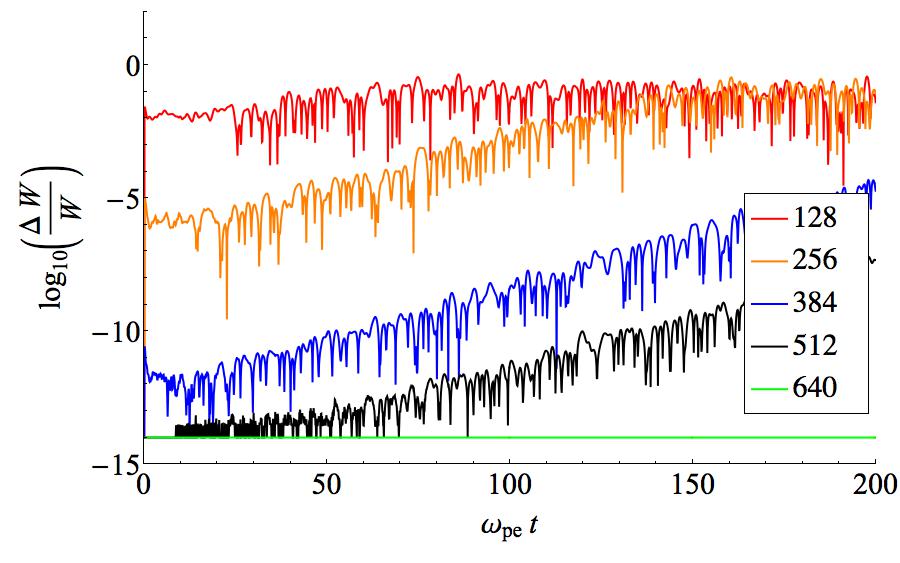

Verification and Convergence Properties of Particle-in-Cell Codes

Despite the wide use of PIC codes throughout plasma physics for over 50 years, there still does not appear to be a consensus on the mathematical model that PIC codes represent. PICKSC researchers have recently found that a conventional spectral PIC code can be shown to converge to a spectral gridless code with finite-size particles, indicating that the appropriate underlying model of PIC codes is the Klimontovich equation with finite-size particles as opposed to the Vlasov equation or a statistical model such as the Vlasov-Boltzmann equation.

Using gridless codes developed by Viktor Decyk for both electrostatic and electromagnetic cases, the energy evolution of a 1D, periodic, thermal plasma was shown to converge exactly (within machine precision) as the number of Fourier modes, the particle size, and the time step were varied. Following this, the researchers compared a conventional spectral PIC code to the gridless code, showing the convergence of electrostatic and electromagnetic PIC codes to the gridless code as the cell size was varied and the particle size was kept constant. They further verified conditions for which electron plasma waves had the proper dispersion relation. Interestingly, these convergence tests suggested that when using PIC codes with Gaussian-shaped particles, convergence occurred when using grid sizes less than half the electron Debye length and a particle size of approximately one Debye length, contrasting slightly with conventional PIC usage of equal grid sizes and particle sizes.

References

[B. J. Winjum, J. J. Tu, S. S. Su, V. K. Decyk, and W. B. Mori, “Verification and Convergence Properties of a Particle-In-Cell Code”, to be published.]

PICKSC members get BlueWaters access

The UCLA Simulation of Plasmas Group and the OSIRIS Consortium have been given access to Blue Waters, one of the most powerful supercomputing machines in the world. Blue Waters is supported by the National Science Foundation and the University of Illinois at Urbana-Champaign, and it is managed by the National Center for Supercomputing Applications. The UCLA group hopes to use their access to investigate scientific questions about inertial fusion energy, plasma-based acceleration, energetic particle generation in the cosmos, and magnetotail substorms.

Dr. Tsung’s presentation for the group at the 2014 Blue Waters Symposium may be viewed here.

PICKSC hosts first annual workshop

The UCLA Particle-in-Cell (PIC) and Kinetic Simulation Software Center (PICKSC) hosted a workshop on enabling software interoperability within the PIC community. We invited the primary developers of about a dozen major PIC codes used in the study of Laser Plasma Interactions (LPI), as well as a few developers from other areas. The LPI community shares intellectual ideas about simulations effectively, but has rarely shared the software itself. There is no large community code. Almost all the developers we invited accepted, indicating a strong interest in this topic.

The workshop was held at UCLA from Sept 22-24, 2014. The four sessions were primarily organized as discussions, with short presentations to add additional material. The first major topic was whether interoperability was desired and what it actually means. There was agreement on a wide number of issues:

- 1. Desire for a code of ethics, including acknowledgment when code is reused. This could be an acknowledgment in a publication, references to papers, or authorship in a publication. The latter might be appropriate if a shared code enabled new research capability.

- 2. Desire for standard problems to verify or validate new codes or modified codes, including a database of physics benchmarks with standard inputs. One would like to easily reproduce the results of a paper, in hours, not months, with an independently developed code.

- 3. Desire for common display formats.

- 4. Interoperability of software may be enabled via middleware, with simple interfaces.

- 5. Desire for workflow interoperability between different codes, using output of one code as input to another.

The second major topic was how to enable software interoperability. The attendees discussed and compared units, data structures and objects used in the various codes. Two languages were in common use in the community, Fortran and C/C++. Scripting languages (often Python) were sometimes used to glue components together. Fortran 2003 has standard interoperability with C, which simplifies language interoperability. There were two common types of units in use, dimensionless units and SI. Dimensionless units are used by those who adhere to the philosophy that a simulation represents many actual physical systems. Translating units is generally straightforward, but can be tricky since not everything is well documented. Some codes had public units for input/output but different units internally. Among object-oriented codes, there was a wide variety of classes with different dependencies. It was felt that only simple objects could actually interoperate at this time. Different parallel domain decompositions used in the codes could also pose a problem, but this was not extensively discussed.
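As an illustration of the unit translation discussed above, the sketch below converts dimensionless time and length to SI. It assumes one common normalization (time in units of the inverse electron plasma frequency, length in units of the collisionless skin depth c/ω_p); the workshop summary does not say which normalization any particular code uses, and the function names are hypothetical.

```python
import math

# CODATA 2018 values of the physical constants, in SI units.
E_CHARGE = 1.602176634e-19   # elementary charge [C]
E_MASS = 9.1093837015e-31    # electron mass [kg]
EPS0 = 8.8541878128e-12      # vacuum permittivity [F/m]
C_LIGHT = 299792458.0        # speed of light [m/s]


def plasma_frequency(density_m3):
    """Electron plasma frequency [rad/s] for a reference density [m^-3]."""
    return math.sqrt(density_m3 * E_CHARGE ** 2 / (EPS0 * E_MASS))


def to_si(time_norm, length_norm, density_m3):
    """Convert normalized time and length to (seconds, meters).

    Assumed normalization: time in 1/omega_p, length in c/omega_p.
    """
    omega_p = plasma_frequency(density_m3)
    return time_norm / omega_p, length_norm * C_LIGHT / omega_p


# The same dimensionless run maps onto different physical systems
# depending on the reference density that is chosen.
for density in (1.0e24, 1.0e26):
    t_si, x_si = to_si(100.0, 1.0, density)
    print("n0 = %.1e m^-3: 100/omega_p = %.3e s, c/omega_p = %.3e m"
          % (density, t_si, x_si))
```

The loop shows the point made above: one dimensionless simulation represents many physical systems, and only the choice of reference density fixes the SI values.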

The third major topic was how to enable interoperability of algorithms. There was a consensus on providing a simple unit test for each new algorithm, one that compares the algorithm with an analytic solution and can be run independently of the actual PIC code. The use of skeleton codes (or mini-apps) to illustrate how a collection of algorithms interoperate was also discussed. There was a consensus that PICKSC can serve as a focal point for PIC codes, containing pointers between the various codes in the community.
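A unit test of the kind described might look like the following sketch. It checks a velocity rotation of the Boris type in a uniform magnetic field against the analytic gyration solution, with no electric field and non-relativistic motion. The choice of algorithm, the function names, and the parameters are illustrative assumptions and do not come from any code discussed at the workshop.

```python
import math


def boris_rotate(vx, vy, omega_c, dt):
    """Advance (vx, vy) by one step in a uniform B field along z (Boris rotation).

    omega_c = q*B/m is the signed cyclotron frequency.
    """
    t = 0.5 * omega_c * dt
    s = 2.0 * t / (1.0 + t * t)
    # v' = v + v x t, with the vector t along z
    vpx = vx + vy * t
    vpy = vy - vx * t
    # v_new = v + v' x s, with the vector s along z
    return vx + vpy * s, vy - vpx * s


def analytic_velocity(v0, omega_c, time):
    """Exact velocity for v(0) = (v0, 0) in a uniform B field along z."""
    return v0 * math.cos(omega_c * time), -v0 * math.sin(omega_c * time)


def run_gyration_test(omega_c=1.0, dt=0.05, nsteps=200, v0=1.0):
    """Return (speed error, velocity error) relative to the analytic solution."""
    vx, vy = v0, 0.0
    for _ in range(nsteps):
        vx, vy = boris_rotate(vx, vy, omega_c, dt)
    ax, ay = analytic_velocity(v0, omega_c, nsteps * dt)
    speed_error = abs(math.hypot(vx, vy) - v0)
    velocity_error = math.hypot(vx - ax, vy - ay)
    return speed_error, velocity_error


coarse = run_gyration_test(dt=0.05, nsteps=200)
fine = run_gyration_test(dt=0.025, nsteps=400)
print("speed error (coarse):", coarse[0])
print("velocity error ratio coarse/fine:", coarse[1] / fine[1])
```

The test needs no grid, field solver, or particle manager, so it can run on its own. It checks two properties: the speed is conserved to round-off, and the phase error falls by about a factor of four when the time step is halved, as expected for a second-order scheme.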